Training set positions

These positions were solved by trying out several combinations of angle and velocity and evaluating the relative utility of each through the reinforcement function.
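The search described above can be sketched as an exhaustive grid over candidate kick angles and speeds, keeping the pair with the highest utility. This is a minimal sketch, not the original code. The function names `best_kick` and `toy_reinforcement`, the grid values, and the reward shape (progress towards the goal minus a penalty for kicking near a defender) are all hypothetical stand-ins for the actual reinforcement function.

```python
import itertools
import math


def toy_reinforcement(goal_dir, defender_dir, angle, speed):
    """Hypothetical utility of a kick (all angles in degrees).

    Rewards progress towards the goal and penalises kicks that pass
    within 20 degrees of the defender's bearing. Not the original function.
    """
    progress = speed * math.cos(math.radians(angle - goal_dir))
    risk = 1.0 if abs(angle - defender_dir) < 20 else 0.0
    return progress - 2.0 * risk


def best_kick(goal_dir, defender_dir, angles, speeds):
    """Try every (angle, speed) pair and return the one with the highest utility."""
    candidates = itertools.product(angles, speeds)
    return max(
        candidates,
        key=lambda kick: toy_reinforcement(goal_dir, defender_dir, *kick),
    )


angles = range(-90, 91, 15)   # candidate kick directions in degrees (assumed grid)
speeds = (0.5, 1.0, 1.5)      # candidate kick speeds in arbitrary units (assumed grid)
best = best_kick(goal_dir=0, defender_dir=10, angles=angles, speeds=speeds)
print(best)  # (-15, 1.5)
```

The grid resolution trades search cost against how finely the best kick can be located; with a defender just to the right of the goal direction, the toy reward picks the steepest safe angle on the other side at full speed.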

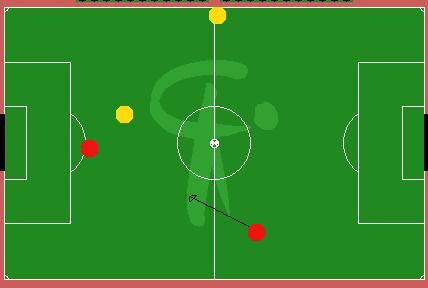

A practical point that affects the learned agent behaviour in dribbling must be noted: the interception skill is much more difficult from close range than it first appears. If the ball is kicked fast and very close past a defender, the defender gets only a very rough estimate of the ball's velocity and is left turning on the spot. This was a weakness of our interceptor module, and the learning dribbler exploits it automatically. Several of the examples below show the dribbler's tendency to hit the ball hard close to an agent and dash past it before the agent can turn.
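To illustrate why a brief, close-range look at the ball yields only a rough velocity estimate, the sketch below fits a straight line to noisy position sightings. It assumes, purely for illustration, that a defender estimates ball velocity by least squares over its recent sightings and that each sighting carries a fixed-size error; neither the estimator nor the noise pattern is taken from our interceptor module, and `estimate_velocity` and `velocity_error` are hypothetical names.

```python
import numpy as np


def estimate_velocity(times, observed_positions):
    """Least-squares slope of position against time (units per cycle)."""
    slope, _intercept = np.polyfit(times, observed_positions, 1)
    return slope


true_velocity = 2.0  # hypothetical ball speed, units per cycle
noise = 0.3          # hypothetical error on each sighting


def velocity_error(num_sightings):
    """Absolute velocity error after num_sightings consecutive sightings."""
    t = np.arange(num_sightings, dtype=float)
    # Deterministic noise pattern for illustration: alternate +noise, -noise.
    jitter = noise * (-1.0) ** t
    observed = true_velocity * t + jitter
    return abs(estimate_velocity(t, observed) - true_velocity)


print(velocity_error(2))   # ball flashes past close by: only two sightings
print(velocity_error(10))  # ball watched over a longer run
```

With two sightings the error equals twice the per-sighting noise, whereas ten sightings shrink it by more than an order of magnitude, which is consistent with a fast, close pass leaving the defender with a poor estimate.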

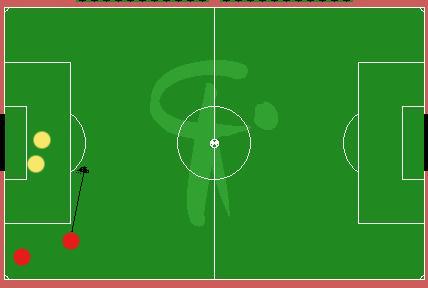

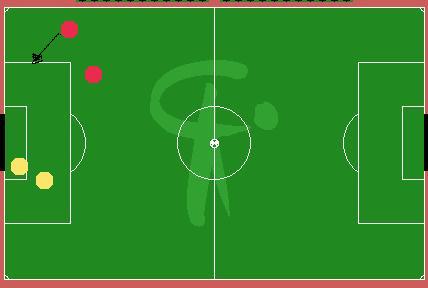

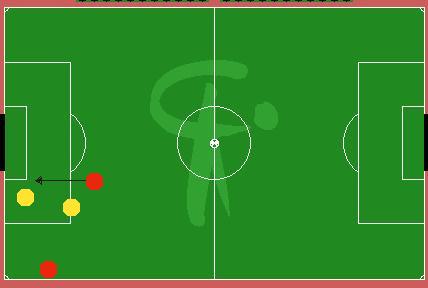

Fig 1: The agent tries to move towards the centre, since the direct path to goal is blocked and the probability of scoring is higher when shooting from the centre.

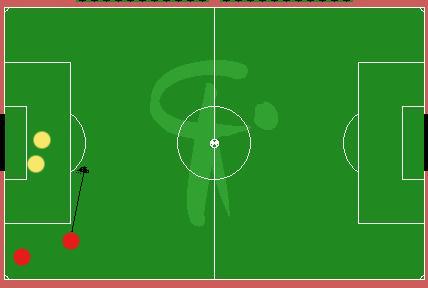

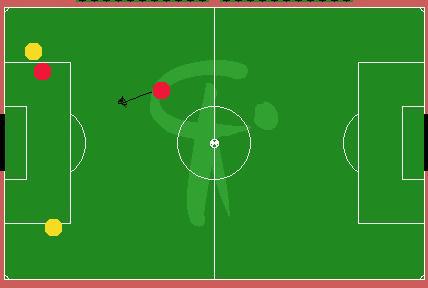

Fig 2: The agent dribbles the ball away from the defenders, who are both on one side.

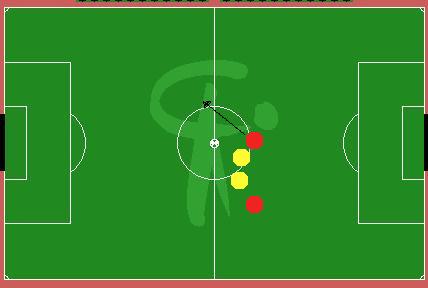

Fig 3: The agent's primary objective is to move quickly towards the opponent goal. Note that this example demonstrates the poor interceptability of the ball when kicked hard and close to a defender.

Rules learnt

These consist of the centres in the hidden layer of the radial basis function network. Since these centres are derived by clustering the input and output spaces, one expects them to be representative points in this space. They can thus be considered the rules learnt in the training process. Every new position encountered after the training stage is evaluated by considering its distance from these centres.
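The idea of centres as rules can be sketched with plain k-means on joint input-output vectors, followed by a Gaussian distance score for a new position. This is an illustrative sketch under stated assumptions: clustering the concatenated input and output columns is one reading of "clustering the input and output spaces", the normalised weighted blend of rule outputs is one possible way to evaluate a position, and the sample features, `kmeans`, and `evaluate` are hypothetical.

```python
import numpy as np


def kmeans(data, k, iters=20):
    """Plain Lloyd's k-means; the first k rows are the initial centres."""
    centres = data[:k].astype(float).copy()
    for _ in range(iters):
        # Assign each sample to its nearest centre (Euclidean distance).
        dists = np.linalg.norm(data[:, None, :] - centres[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each centre to the mean of its assigned samples.
        for j in range(k):
            members = data[labels == j]
            if len(members) > 0:
                centres[j] = members.mean(axis=0)
    return centres


def evaluate(position, centres, n_inputs, width=1.0):
    """Score a new position by its Gaussian distance to each rule's input part,
    then blend the rules' outputs with those weights (normalised RBF)."""
    inputs, outputs = centres[:, :n_inputs], centres[:, n_inputs:]
    sq_dist = np.sum((inputs - position) ** 2, axis=1)
    activation = np.exp(-sq_dist / (2.0 * width ** 2))
    return activation, activation @ outputs / activation.sum()


# Hypothetical samples: [feature_1, feature_2, kick_angle, kick_speed].
# In practice the columns would be normalised so no single one dominates distances.
samples = np.array([
    [0.0, 0.0, 10.0, 1.0],
    [5.0, 5.0, -30.0, 2.5],
    [0.2, 0.1, 12.0, 1.1],
    [5.1, 4.9, -28.0, 2.4],
    [0.1, 0.2, 11.0, 0.9],
    [4.9, 5.2, -32.0, 2.6],
])

rules = kmeans(samples, k=2)
activation, kick = evaluate(np.array([0.3, 0.0]), rules, n_inputs=2)
print(rules)
print(activation.argmax(), kick)
```

The first centre settles at the mean of the three samples near the origin, so a new position close to them activates that rule almost exclusively and inherits its kick angle and speed.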

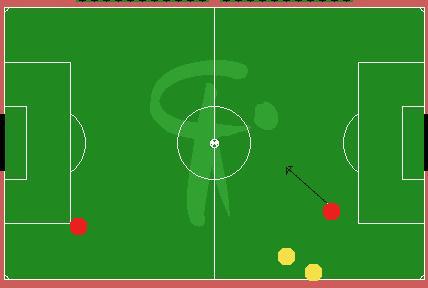

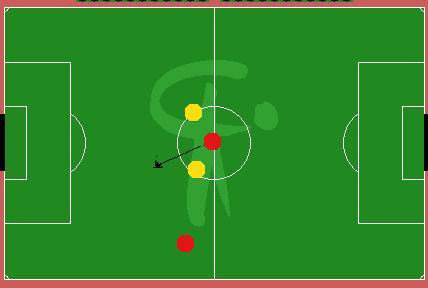

Fig 4: The agent finds the shortest path to goal.

Fig 5: The agent sees a gap and rushes to goal.

Fig 6: The defenders are far away. The agent dribbles at higher speed to cover ground quickly.

Performance in novel positions

As was mentioned earlier, the relatively high number of centres used in the generalization process improves the performance of the trained RBFN at the training-set instances at the cost of performance at other locations in the input space.
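The trade-off can be seen in a one-dimensional toy problem. The sketch below, which is illustrative only, fits two Gaussian RBF networks by linear least squares to the same five training points: one with a narrow centre on every sample, the other with two broad centres. The centre counts, widths, flat target, and the helper names `design_matrix` and `fit_rbfn` are assumptions chosen to make the effect visible, not the settings of our trained RBFN.

```python
import numpy as np


def design_matrix(x, centres, width):
    """Gaussian RBF activations: one row per input, one column per centre."""
    return np.exp(-((x[:, None] - centres[None, :]) ** 2) / (2.0 * width ** 2))


def fit_rbfn(x, y, centres, width):
    """Solve for the output-layer weights by linear least squares."""
    phi = design_matrix(x, centres, width)
    weights, *_ = np.linalg.lstsq(phi, y, rcond=None)
    return lambda q: design_matrix(q, centres, width) @ weights


x_train = np.arange(5.0)          # training positions 0, 1, 2, 3, 4
y_train = np.ones_like(x_train)   # a flat utility surface, for simplicity
x_novel = x_train[:-1] + 0.5      # unseen positions between training points

# Many narrow centres (one per sample) versus two broad centres.
many = fit_rbfn(x_train, y_train, centres=x_train, width=0.2)
few = fit_rbfn(x_train, y_train, centres=np.array([0.0, 4.0]), width=2.0)

for name, model in (("many centres", many), ("few centres", few)):
    train_err = np.max(np.abs(model(x_train) - y_train))
    novel_err = np.max(np.abs(model(x_novel) - 1.0))
    print(f"{name}: train error {train_err:.3f}, novel error {novel_err:.3f}")
```

On this toy problem the many-centre network reproduces the training points almost exactly but predicts close to zero halfway between them, while the two-centre network is slightly off at the training points and much closer to the target elsewhere.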

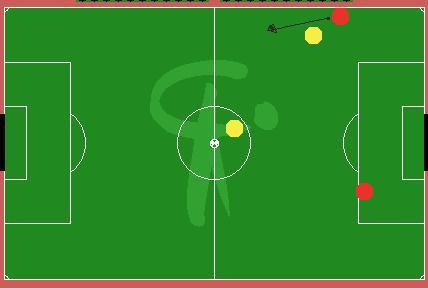

Fig 7: The agent kicks at an angle rather than straight through the centre. This works with our interceptors because the ball is very close to one defender and relatively far away from the other.

Fig 8: The agent is not sufficiently accurate in positions like this one; here it would be better off shooting than dribbling the ball further.

Fig 9: The agent figures out that it is preferable to move away from defenders while still moving towards the opponent goal.