Assignment 1

Group E

Here are the slides of the presentation.

Summaries of the discussions

Thought experiment: Chinese Room Argument

A room contains a person who doesn't understand Chinese and a rule book that enables them to answer Chinese questions in Chinese. Native Chinese speakers outside the room ask questions and the person inside answers them. The person thus answers just as a native Chinese speaker would, but doesn't understand (attach meaning to) any of the questions.
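The rule book can be pictured as a pure lookup on symbol shapes. The following is a minimal sketch under that assumption; `RULE_BOOK`, its entries, and `person_in_room` are hypothetical names invented for illustration and are not taken from Searle's text.

```python
# Hypothetical rule book: pairs the shape of an incoming question
# with the shape of a reply. Nothing here encodes what either string means.
RULE_BOOK = {
    "你好吗？": "我很好。",
    "你叫什么名字？": "我叫小明。",
}

# Fallback reply used when no rule matches (also just a string of symbols).
DEFAULT_REPLY = "请再说一遍。"


def person_in_room(question: str) -> str:
    """Answer by matching symbol shapes only; no meaning is consulted."""
    return RULE_BOOK.get(question, DEFAULT_REPLY)


if __name__ == "__main__":
    print(person_in_room("你好吗？"))
```

From outside the room the replies look competent, yet the function only compares strings for equality, which is the sense in which the person "doesn't understand" the questions.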

Strong AI

The claim of Strong AI: Thinking is just manipulation of formal symbols, which is what the computer does. Therefore, some machine can perform any intellectual task that a human being can.

Ferdinand de Saussure (1916): Nature of the linguistic sign

Simple view of language:-

Language is seen only as a set of words, each of which corresponds directly to a real-world concept.

Criticism:-

Above view assumes that real-world concepts exist before language.

Rather, the words and the concepts are very strongly associated.

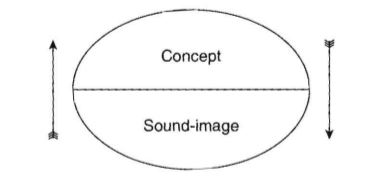

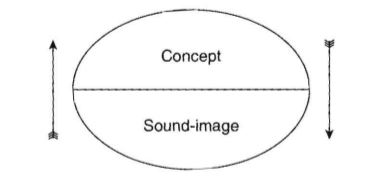

Thus, the linguistic sign is not merely an association between a word and a thing; it is the unification of a concept and a sound-image (the "psychological imprint" that the word leaves on our minds).

de Saussure says that these two are very strongly united, and calls the whole the linguistic "sign", where the concept is the signified and the sound-image is the signifier.

The bond between the signifier and the signified is arbitrary, i.e. nothing in the concept itself determines which sound-image is attached to it; the pairing is fixed by convention.

Charles Sanders Peirce (1932): The sign: icon, index, and symbol

Signs are of three types:-

Icon: Physically resembles the object it stands for.

Visual icons might be very simple drawings resembling objects. For example, a little fan-like picture is usually drawn on the regulator of a fan. It is the icon of the fan.

Words may also be iconic. For example, whoosh, crash, crack are words that resemble the sounds they stand for.

Index: Correlates with the object it stands for.

Body language is a very strong sign of the psychological state of a person/animal, hence an index.

Monuments can serve as indices of geographical regions. For example, the Eiffel Tower may be an index of Paris, or of France; the Taj Mahal may be an index of Agra, or of India.

Symbol: Completely arbitrarily associated with the object it stands for.

Most words in natural languages are symbols. For example, table, chair, bed.

Religious symbols. For example, the Cross (Christianity), the Star of David (Judaism).

John Searle (1990): Is the Brain's Mind a Computer Program?

Can the person in the Chinese room "understand" (associate meaning with) Chinese?

Searle's argument -

Axiom 1 - Programs are formal (syntactic).

Axiom 2 - Minds have mental contents (semantics).

Axiom 3 - Syntax is not enough to convey semantics. Syntax by itself is neither constitutive of nor sufficient for semantics.

Using the above three axioms, we can derive:

Programs are just syntax, and hence cannot convey semantics (A1 and A3). Minds, however, do need semantics (A2).

Conclusion 1 - Programs are neither constitutive of nor sufficient for minds.

Can our brains be solely a computer program running?

Searle's argument -

Axiom 4 - Brains cause minds. They have some "causal powers".

Conclusion 2 - From Axiom 4, Searle derives "immediately" and "trivially" that any other system capable of causing minds must have causal powers at least equivalent to those of brains.

Conclusion 3 - Since no program can by itself produce a mind (C1), and only systems with "equivalent causal powers" can produce minds (C2), running a program does not by itself confer those causal powers. Hence, our brain does not produce a mind solely by running a computer program.

He does not explain why a brain has these "causal powers" that give rise to mental contents (i.e. semantics).

Harnad (1990): The Symbol Grounding Problem

The Symbol Grounding Problem: How can the semantic interpretation of a formal symbol system be made intrinsic to the system, and how can meaningless symbol tokens, manipulated only on the basis of their shapes, be grounded in anything other than more meaningless symbols?

A formal symbol system is defined by eight properties, taken to be necessary and sufficient.

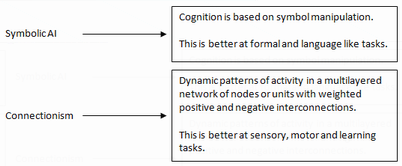

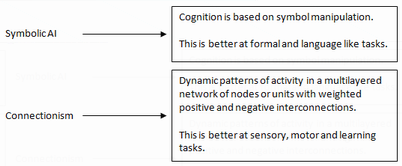

There are two approaches to modeling the mind: symbolic AI and connectionism (neural networks), or a combination of the two.

1. Chinese Room Argument:

Challenges the core assumption of symbolic AI that a symbol system able to generate behavior indistinguishable from that of a person must have a mind.

2. Chinese/Chinese dictionary-go-round:

It argues that you cannot learn Chinese from a Chinese/Chinese dictionary alone, because the dictionary only defines groups of symbols in terms of other groups of symbols.
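The dictionary-go-round can be sketched as a graph walk: looking up a definition only ever leads to more entries. This is a toy illustration, not Harnad's own formalism. The dictionaries, the function names, and the convention that a token absent from the dictionary stands in for a non-symbolic anchor are all assumptions made for the example.

```python
def reachable_symbols(dictionary: dict, start: str) -> set:
    """Collect every token reachable by repeatedly looking up definitions."""
    seen = set()
    stack = [start]
    while stack:
        symbol = stack.pop()
        if symbol in seen:
            continue
        seen.add(symbol)
        # Tokens without an entry have no definition to follow.
        stack.extend(dictionary.get(symbol, []))
    return seen


def is_grounded(dictionary: dict, start: str) -> bool:
    """True only if some lookup chain reaches a token outside the dictionary.

    Assumption: a token with no entry represents something non-symbolic
    (for example a sensory category), i.e. a grounding point.
    """
    return any(s not in dictionary for s in reachable_symbols(dictionary, start))


# Hypothetical closed dictionary: every entry is defined by other entries.
CLOSED = {"A": ["B", "C"], "B": ["C", "A"], "C": ["A", "B"]}

# Hypothetical variant in which one entry bottoms out in a non-symbolic token.
ANCHORED = {"A": ["B", "C"], "B": ["C"], "C": ["<percept>"]}

if __name__ == "__main__":
    print(is_grounded(CLOSED, "A"))
    print(is_grounded(ANCHORED, "A"))
```

In the closed dictionary the walk never leaves the set of entries, however many definitions are followed; only the anchored variant reaches something that is not itself defined by further symbols.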

The human brain has the capacity to discriminate and to identify. Consider the symbol "horse." We are able to tell individual horses apart, and even to judge how similar two horses are. This is called "discrimination." On the other hand, if we see a horse and can reliably call it a "horse", we are doing "identification."

Cognitive processes involve:

Iconic - the perceptual and sensory part

Categorical - distinguishing some unique features

Symbolic - grounded elementary symbols can be combined in different ways, and the newly formed combinations inherit their grounding from those elementary symbols.

Iconic representations support discrimination and categorical representations support identification. Both iconic and categorical representations are nonsymbolic. The former are analog copies of the sensory projection; they preserve the shape of what is perceived. The latter are icons that have been selectively filtered to preserve only some features of the shape of the sensory projection. These features distinguish members from nonmembers of a given category.
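The difference between the two nonsymbolic representations can be sketched in a few lines. This is an illustration under strong simplifying assumptions: the feature tuples, the threshold, and the function names are hypothetical, and a real sensory projection would be far richer than four numbers.

```python
import math

# Hypothetical "sensory projections":
# (height in metres, leg count, has mane, stripe density)
horse_a = (1.6, 4, 1, 0.0)
horse_b = (1.5, 4, 1, 0.0)
zebra = (1.3, 4, 1, 0.9)


def discriminate(icon_x, icon_y):
    """Discrimination: compare two icons using the whole projection.

    Returns a distance; larger means the two inputs look less alike.
    """
    return math.dist(icon_x, icon_y)


def categorical(icon):
    """Categorical representation: keep only the features assumed to
    separate members from nonmembers, discarding the rest (here, height)."""
    _, legs, mane, stripes = icon
    return (legs, mane, stripes < 0.5)


def identify(icon):
    """Identification: attach a category name based on the filtered features."""
    return "horse" if categorical(icon) == (4, 1, True) else "not-horse"


if __name__ == "__main__":
    print(discriminate(horse_a, horse_b) < discriminate(horse_a, zebra))
    print(identify(horse_a), identify(horse_b), identify(zebra))
```

The two horses remain distinguishable because the icon keeps every feature, while both receive the same name because the categorical filter throws away the features on which they differ.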

Cangelosi, Greco and Harnad (2002): Symbol Grounding and the Symbolic Theft Hypothesis

Each symbol is part of a more complex system. The system is governed by a set of rules called syntax.

Models of language and cognition must include a link between some basic symbols and real-world objects. These basic symbols must be grounded in the mind (in the form of categories). The computational models of categorization, symbol grounding transfer and language acquisition described in the paper are based on neural networks. These models perform categorizations very easily.

Denning (1990): Is Thinking Computable?

Denning presents various views on whether machines can be intelligent:-

Searle's views:

Take the Chinese room experiment. Even though the system consisting of the person and the rule book manipulates Chinese symbols as perfectly as a native speaker would, no understanding of the symbols is going on. Similarly, the fact that some computer can manipulate symbols doesn't necessarily mean that it can "think". This negates the Strong AI claim.

Thus, passing the Turing test is not a sufficient condition for conscious intelligence.

In fact, no algorithm can be intelligent in principle (see the discussion of Searle's paper above).

The Churchlands' views:

The Churchlands agree with Searle that the Turing test is too "weak" a criterion for conscious intelligence.

But since brains are just extremely complicated webs of neurons and nothing too special, a sufficiently powerful computer can in principle do the same tasks as a human brain and hence have the same kinds of experiences.

Penrose's views:

Penrose agrees with the others on the "weakness" of the Turing test.

But he also says that mental processes are inherently more powerful than computational processes.

He says that minds have the gift of creative thinking, whereas machines can only do logical deduction. A lot of the problem solving that humans do cannot be done by any general algorithm, so computers will never be able to do what humans do. He thinks that computers will never be able to pass the Turing test.

Denning says that a fixed interpretation cannot include all phenomena. We humans step outside a particular interpretation and devise alternatives, which no algorithm can do, because algorithms are nothing but fixed interpretations. He believes that even though we might be able to build systems that do increasingly complicated tasks, we will never be able to make something that supersedes our own capacities.

Another summary of the same paper

References:

- Saussure, Ferdinand de; Nature of the Linguistic Sign; In Charles Bally & Albert Sechehaye (Ed.)(1916), Cours de linguistique générale, McGraw Hill Education. ISBN 0-07-016524-6.

- Charles Sanders Peirce; The Collected Papers of Charles Sanders Peirce: Vol. II, ed. Charles Hartshorne and Paul Weiss, pp. 135, 143-4, 169-73.

- John R. Searle; Is the Brain's Mind a Computer Program?; In Scientific American, January 1990, pp. 26-31

- Harnad, S.; The Symbol Grounding Problem; (1990); Physica D 42: 335-346.

- Angelo Cangelosi, Alberto Greco and Stevan Harnad; Symbol Grounding and the Symbolic Theft Hypothesis; In Cangelosi A & Parisi D (Eds) (2002). Simulating the Evolution of Language. London: Springer

- Peter J. Denning; The Science of Computing: Is Thinking Computable?; American Scientist, Vol. 78, No. 2 (March-April 1990), pp. 100-102
