Homework 3

How Do Systems Learn?

MATLAB code for the regression analysis: regression.m

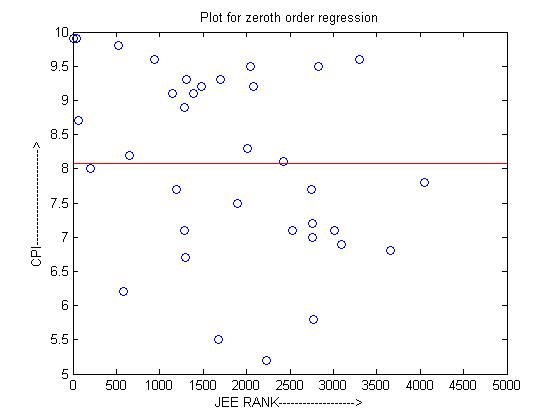

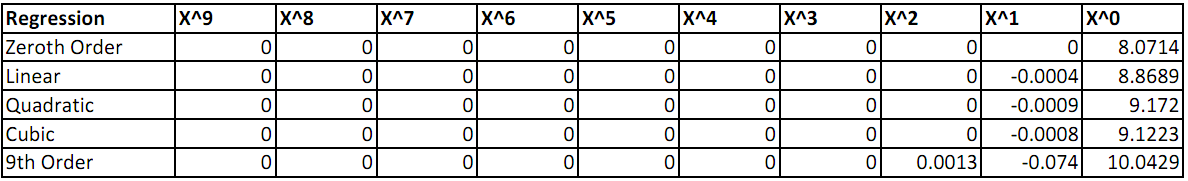

For a degree-0 (constant) fit, the equation is CPI = 8.0714 and the residual error is 2.0301e-014.
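regression.m is not reproduced here, so the report does not say exactly how "residual error" is computed. If it is the mean of the residuals (an assumption), values on the order of 1e-14 are what least squares should produce. The degree-0 least-squares fit is simply the sample mean of CPI, and residuals about the mean sum to zero up to floating-point rounding. The minimal Python/NumPy sketch below checks this. The helper `constant_fit` and the data values are hypothetical and are not taken from the assignment.

```python
import numpy as np

def constant_fit(rank, cpi):
    """Degree-0 least-squares fit of CPI against JEE rank (hypothetical helper).

    Returns the fitted constant and the mean residual, which is assumed here
    to be what the report calls the residual error.
    """
    rank = np.asarray(rank, dtype=float)
    cpi = np.asarray(cpi, dtype=float)
    # A degree-0 polynomial ignores rank entirely; the value of c that
    # minimizes sum((cpi - c)^2) is c = mean(cpi).
    c = np.polyfit(rank, cpi, 0)[0]
    residuals = cpi - c
    return c, residuals.mean()

# Hypothetical data for illustration only (not the assignment's data set).
rank = [120, 450, 900, 1500, 2300]
cpi = [9.1, 8.9, 8.5, 7.2, 6.8]
c, mean_res = constant_fit(rank, cpi)
print(c, np.mean(cpi), mean_res)
```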

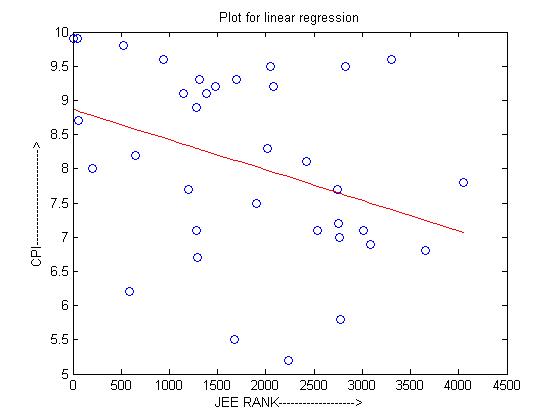

For a degree-1 (linear) fit, the equation is CPI = -0.0004*(JEE RANK) + 8.8689 and the residual error is -2.2585e-015.

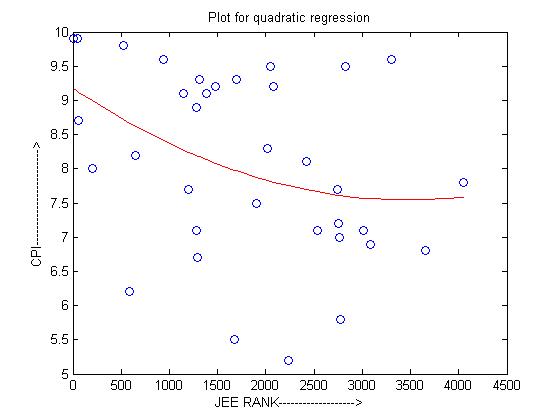

For a degree-2 (quadratic) fit, the equation is CPI = 0.0000*(JEE RANK)^2 - 0.0009*(JEE RANK) + 9.1720 and the residual error is -1.2942e-015.

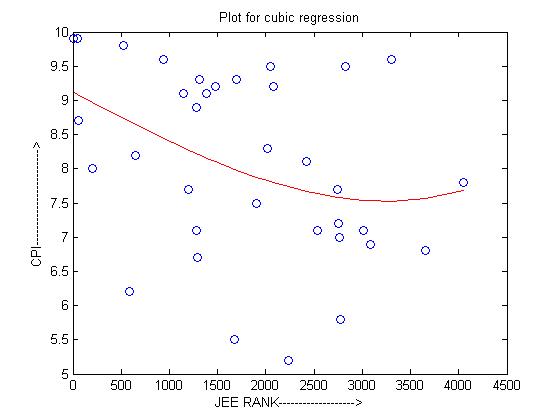

For a degree-3 (cubic) fit, the equation is CPI = 0.0000*(JEE RANK)^3 + 0.0000*(JEE RANK)^2 - 0.0008*(JEE RANK) + 9.1223 and the residual error is 5.5828e-016.

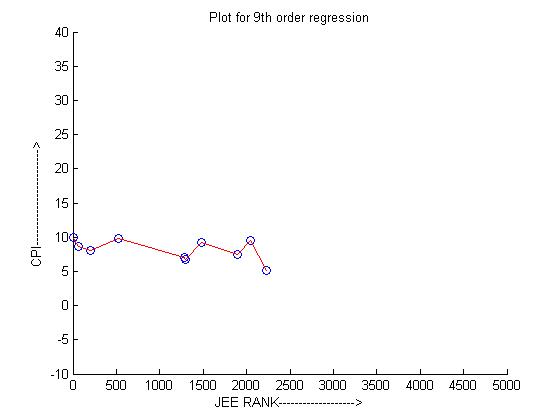

For a degree-9 fit, the equation is CPI = -0.0000*(JEE RANK)^9 + 0.0000*(JEE RANK)^8 - 0.0000*(JEE RANK)^7 + 0.0000*(JEE RANK)^6 - 0.0000*(JEE RANK)^5 + 0.0000*(JEE RANK)^4 - 0.0000*(JEE RANK)^3 + 0.0013*(JEE RANK)^2 - 0.0740*(JEE RANK) + 10.0429 and the residual error is 4.6097e-010.

The coefficients obtained for each degree are tabulated as follows:

As can be observed, the absolute values of the coefficients grow as the degree of the polynomial increases. For example, the coefficient of (JEE RANK) is -0.0004 in the linear fit but -0.0740 in the degree-9 fit.
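The single-variable fits can be sketched in Python/NumPy as follows. This is an illustrative translation under stated assumptions, not the contents of regression.m: the data are synthetic and `fit_degrees` is a hypothetical helper. Two design choices are worth noting. First, raw JEE ranks run into the thousands, so a term such as (JEE RANK)^9 is enormous and its coefficient correspondingly tiny, which is why many coefficients print as 0.0000 at four decimal places. `Polynomial.fit` rescales the input to [-1, 1] before solving, which keeps the degree-9 problem well conditioned; MATLAB's `[p, S, mu] = polyfit(x, y, n)` form offers similar centering and scaling. Second, the sketch reports the root-mean-square (RMS) residual alongside the mean residual. If the reported error is the mean residual, it will be close to zero for every degree of a least-squares fit that includes a constant term.

```python
import numpy as np
from numpy.polynomial import Polynomial

def fit_degrees(rank, cpi, degrees):
    """Fit CPI as a polynomial in rank for each degree (hypothetical helper)."""
    rank = np.asarray(rank, dtype=float)
    cpi = np.asarray(cpi, dtype=float)
    results = {}
    for d in degrees:
        # Polynomial.fit maps rank onto [-1, 1] internally, so high powers
        # stay well conditioned even when rank is in the thousands.
        p = Polynomial.fit(rank, cpi, d)
        residuals = cpi - p(rank)
        results[d] = {
            # Coefficients in the original rank variable, lowest power first.
            "coef": p.convert().coef,
            "mean_residual": residuals.mean(),
            "rms_residual": np.sqrt(np.mean(residuals ** 2)),
        }
    return results

# Synthetic, hypothetical data: 40 students with ranks between 1 and 4000.
rng = np.random.default_rng(0)
rank = np.sort(rng.uniform(1, 4000, 40))
cpi = 9.0 - 0.0005 * rank + rng.normal(0, 0.4, rank.size)

res = fit_degrees(rank, cpi, [0, 1, 2, 3, 9])
for d, r in res.items():
    print(d, r["mean_residual"], r["rms_residual"])
```

Because each lower-degree model is nested inside the higher-degree ones, the RMS residual can only stay the same or fall as the degree rises, while the mean residual stays near zero throughout.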

When CPI is modeled as a function of both JEE rank and age, we get the following equations and residual errors:

(I) Linear: CPI = 10.0467 - 0.0005*(JEE RANK) - 0.0578*(AGE), and the residual error is 2.7914e-016.

(II) Quadratic: the residual error is also of the order of 1e-016.
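The two-variable fits amount to ordinary least squares on a design matrix whose columns are the chosen terms in JEE rank and age. The Python/NumPy sketch below assumes that the quadratic model adds the squared and cross terms (rank^2, rank*age, age^2); the report does not list the quadratic terms actually used. The helper names `design_matrix` and `fit`, and the synthetic data, are hypothetical.

```python
import numpy as np

def design_matrix(rank, age, quadratic=False):
    """Columns: 1, rank, age, plus rank^2, rank*age, age^2 if quadratic."""
    rank = np.asarray(rank, dtype=float)
    age = np.asarray(age, dtype=float)
    cols = [np.ones_like(rank), rank, age]
    if quadratic:
        # Assumed quadratic terms; the report does not specify them.
        cols += [rank ** 2, rank * age, age ** 2]
    return np.column_stack(cols)

def fit(rank, age, cpi, quadratic=False):
    """Least-squares fit; returns (coefficients, mean residual, RMS residual)."""
    X = design_matrix(rank, age, quadratic)
    y = np.asarray(cpi, dtype=float)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ coef
    return coef, residuals.mean(), np.sqrt(np.mean(residuals ** 2))

# Synthetic, hypothetical data for 50 students.
rng = np.random.default_rng(1)
rank = rng.uniform(1, 4000, 50)
age = rng.uniform(17, 20, 50)
cpi = 10.0 - 0.0005 * rank - 0.06 * age + rng.normal(0, 0.3, 50)

lin = fit(rank, age, cpi)
quad = fit(rank, age, cpi, quadratic=True)
print("linear coefficients [1, rank, age]:", lin[0])
print("mean residual:", lin[1], quad[1])
print("RMS residual:", lin[2], quad[2])
```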

The two multivariate cases show very little improvement in the error.

MATLAB code for the probability and entropy calculations: entropy.m

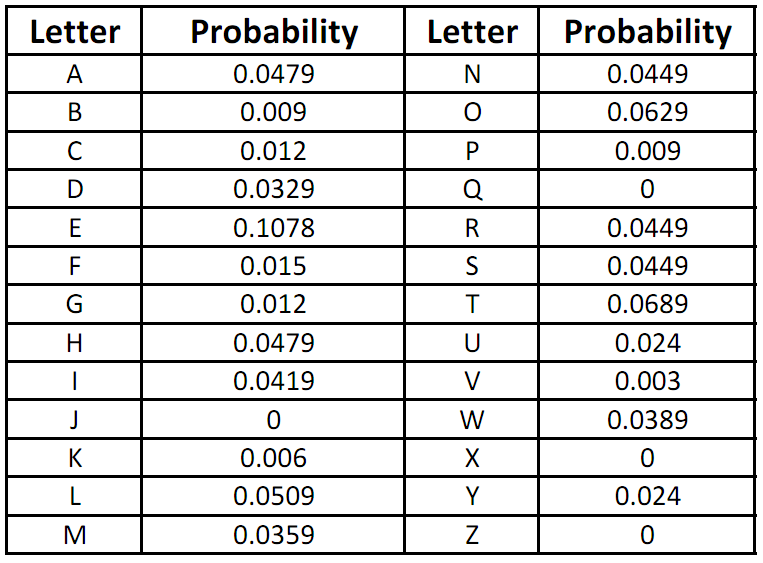

(a) Based on the frequency of the letters in the given text, the probability distribution is as follows:

(b) The entropy of the data is 3.5067.
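A minimal Python sketch of the probability and entropy calculation follows. It assumes that entropy.m counts only the 26 letters a to z (case-folded, ignoring spaces and punctuation) and uses log base 2. Neither choice is stated in the report, and both affect the result, so this sketch is not guaranteed to reproduce 3.5067. The entropy is H = -Σ p_i log2(p_i), summed over the letter probabilities p_i. The function names and the short sample string are illustrative.

```python
import math
from collections import Counter

def letter_distribution(text):
    """Relative frequency of each letter a-z, ignoring case and non-letters."""
    letters = [ch for ch in text.lower() if "a" <= ch <= "z"]
    counts = Counter(letters)
    total = sum(counts.values())
    return {ch: n / total for ch, n in sorted(counts.items())}

def entropy(dist, base=2):
    """Shannon entropy H = -sum(p * log(p)) of a discrete distribution."""
    return -sum(p * math.log(p, base) for p in dist.values() if p > 0)

# Illustrative input: the first few words of the verification text in (c).
sample = "Protests swelled across the nation on Wednesday"
dist = letter_distribution(sample)
print(dist)
print(round(entropy(dist), 4))
```

The same two functions apply unchanged to the longer verification text in (c).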

(c) The following text was used to verify the distribution and entropy:

"Protests swelled across the nation on Wednesday in support of Gandhian Anna Hazare's fast-unto-death in Tihar Jail. The 74-year-old Anna fasted on Wednesday as thousands of his followers gathered outside the jail, the latest development in a crisis that saw him arrested on Tuesday and then refuse to leave jail after the government ordered his release. Thousands of Anna supporters on Wednesday also took out a march from India Gate to Jantar Mantar to express solidarity with the Gandhian in his fight against corruption. Protesters, some of them dressed in T-shirts and Gandhi caps with slogans "I am Anna", gathered at the war memorial at around 4 pm and began marching towards Jantar Mantar, where Hazare had sat on a fast in April which forced the government to expedite the introduction of the Lokpal Bill in Parliament."

The entropy for this text came out to be 3.5086, which is nearly equal to the value of 3.5067 obtained for the original text.

SUMIT VERMA | Y9605 | SE367