Evaluation of a Low-Cost Open-Source Gaze Tracker

JAVIER SAN AGUSTIN, HENRIK SKOVSGAARD, MARIA BARRET, MARTIN TALL, DAN WITZNER

Proceedings of the 2010 Symposium on Eye Tracking Research & Applications, ACM, Austin, TX

Paper Review

Why "Eye Tracking"?

With cities getting smarter and services becoming automated, human-computer interaction is an indispensable part of everyday life. Because the most popular interaction devices are the keyboard and mouse, modern software interfaces are designed to be operated by hand, which makes them unusable for people with hand disabilities. In this paper review, we discuss one intrusive and one non-intrusive real-time interaction technology, both of which rely on eye and face movements to control a mouse cursor. In these papers, real-time eye-cursor control is achieved using off-the-shelf hardware, open-source libraries and an ordinary processor. Although commercial eye trackers are available on the market, they are not very practical for home use because of either high cost (thousands of dollars) or difficult setup procedures. We therefore need to explore systems that use cheap, readily available components such as webcams, IR LEDs and goggles, and that come with open-source software so that any user can build and calibrate their own system.

Image credits: some of the images in this review are from [1]; the others are original to this review.

Evaluation of a tracker

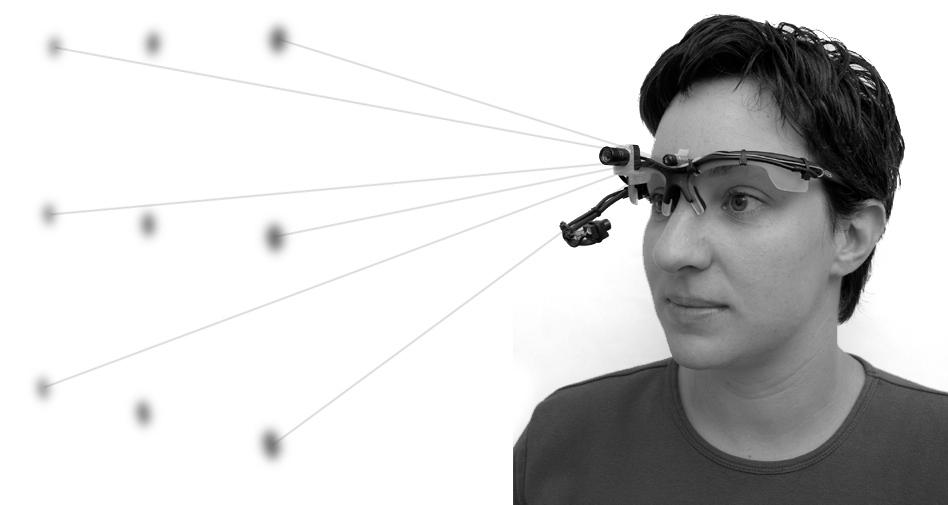

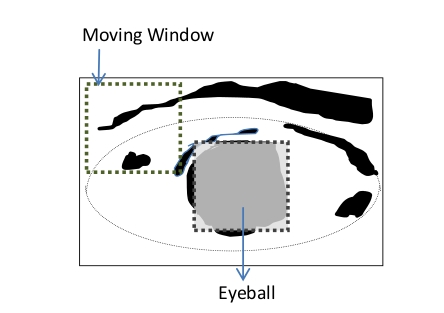

Many open-source eye trackers are available, each with a different speciality. For example, the wearable eye tracker of [1] is an offline system that packs all of its processing equipment into a backpack, allowing the user to carry the system anywhere. Such a system can be used to identify gaze patterns in scenarios such as driving, shopping in a market or painting, and hence in research that aims to classify how gaze patterns change with learning. For example, an experiment in [4] comparing a novice and an experienced driver concluded that, as driving skill develops, gaze moves towards the empty spaces on the road, whereas a novice driver looks for cars. In 2009 the "ITU gaze tracker", a low-cost open-source system, was released by the IT University of Copenhagen. This intrusive system consists of a head-mounted webcam with built-in infrared emitters. In [2] the authors rebuild the system and test it on two different gaze-typing applications (GazeTalk and StarGazer).

The aim of the experiment was to present a system that:

- Works in generic situations, such as while walking, with the display placed at another location within visible range, or in darkness with illumination from the IR source only

- Is low-cost and easy to build from off-the-shelf components

- Gives reasonably accurate results for at least one gaze-typing system

Overall, the study concluded that head movement was a major cause of accuracy loss and that a head-pose-invariant system would be more robust. A typing speed of 6.56 words per minute (WPM) was achieved with the ITU gaze tracker, which is comparable to the 6.26 WPM reported in one study of commercial systems but well below some others, in which as much as 15 WPM has been achieved. A case study of a person in the late stages of ALS was then conducted, in which a rate of one word per minute was achieved.
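Text-entry studies commonly compute WPM by treating every five characters, spaces included, as one word. This review does not say which convention [2] used, so the following is only a sketch under that assumption; the function name `words_per_minute` and the numbers in the usage note are made up for illustration and are not taken from the study.

```python
def words_per_minute(num_chars: int, seconds: float, chars_per_word: int = 5) -> float:
    """Typing speed in WPM, assuming a 'word' is chars_per_word characters.

    The five-character word is an assumed convention, not one confirmed
    by the reviewed paper.
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    if num_chars < 0:
        raise ValueError("num_chars must not be negative")
    words = num_chars / chars_per_word   # characters converted to 'words'
    minutes = seconds / 60.0             # elapsed time converted to minutes
    return words / minutes
```

For instance, `words_per_minute(164, 300)` (164 characters typed in five minutes, an invented figure) works out to about 6.56 WPM.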

API to interface trackers with software: Another obstacle to the adoption of gaze tracking technology is the absence of a standard API for communication between gaze trackers and application software. Until now, most gaze trackers have come with their own custom-built software, and most gaze applications have been written for one particular tracker. In [3] a standard API is presented that abstracts the communication layer and provides socket-based access to various parameters such as left POG (point of gaze), right POG, screen size and camera size, together with handles for calibration and configuration. Although this API does not make gaze tracking itself any faster, it makes it easier to write applications that use open gaze trackers, which should speed up adoption in developer communities. The API follows a client-server model in which the tracker acts as the server and applications act as clients. This allows many applications to use the data transmitted by the tracker simultaneously.
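The client-server arrangement described above can be illustrated with a toy example. This is a minimal sketch and not the API of [3]: the JSON-lines message framing, the field names `left_pog`, `right_pog` and `screen_size`, the sample values, and every class and function name are assumptions made for illustration. A stand-in "tracker" server sends its configuration and a few gaze samples to each client that connects, and two clients read the stream concurrently.

```python
import json
import socket
import socketserver
import threading
from concurrent.futures import ThreadPoolExecutor

# Hypothetical values; a real tracker would stream live estimates.
SCREEN_SIZE = [1280, 1024]
SAMPLES = [
    {"left_pog": [0.41, 0.52], "right_pog": [0.43, 0.51]},
    {"left_pog": [0.45, 0.55], "right_pog": [0.46, 0.54]},
]


class TrackerHandler(socketserver.StreamRequestHandler):
    """Serve one client: send the configuration, then the gaze samples."""

    def handle(self):
        self._send({"type": "config", "screen_size": SCREEN_SIZE})
        for sample in SAMPLES:
            self._send({"type": "gaze", **sample})

    def _send(self, message):
        # One JSON object per line (assumed framing, not taken from [3]).
        self.wfile.write((json.dumps(message) + "\n").encode("utf-8"))


class TrackerServer(socketserver.ThreadingTCPServer):
    """Tracker side: each connected application gets its own thread."""

    allow_reuse_address = True
    daemon_threads = True


def read_stream(address):
    """Application side: collect every message until the server closes."""
    with socket.create_connection(address, timeout=5) as conn:
        with conn.makefile("r", encoding="utf-8") as stream:
            return [json.loads(line) for line in stream]


def demo(num_clients=2):
    """Start the toy tracker and let several clients read it concurrently."""
    server = TrackerServer(("127.0.0.1", 0), TrackerHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        with ThreadPoolExecutor(max_workers=num_clients) as pool:
            address = server.server_address
            return list(pool.map(read_stream, [address] * num_clients))
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` returns one list of messages per client. Because the tracker is the server, adding another application means opening one more connection, with no change on the tracker side.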

Conclusion

Because a person can learn how the cursor moves with head pose and eye position more accurately than a machine can, cursor control will improve with practice. This will still not solve the problem of determining gaze, however, because head-pose variation introduces noise that results in inaccurate POGs. Hence the system will work for interactive purposes but not for research such as that in [4]. Thus, although gaze tracking may become the future mode of interaction between humans and computers, it still lacks accuracy and generality.

References

[1] BABCOCK, J. S., AND PELZ, J. B. 2004. Building a lightweight eyetracking headgear. In Proceedings of the 2004 Symposium on Eye Tracking Research & Applications, ACM, San Antonio, Texas, 109-114.

[2] JAVIER SAN AGUSTIN, HENRIK SKOVSGAARD, MARIA BARRET, MARTIN TALL, DAN WITZNER, Evaluation of a Low-Cost Open-Source Gaze Tracker, Proceedings of the 2010 Symposium on Eye Tracking Research & Applications, ACM, Austin, TX

[3] CRAIG HENNESSEY, ANDREW T. DUCHOWSKI, Expanding the Adoption of Eye-gaze in Everyday Applications, Proceedings of the 2010 Symposium on Eye Tracking Research & Applications, ACM, Austin, TX

[4] Cohen, A. S. (1983). Informationsaufnahme beim Befahren von Kurven, Psychologie für die Praxis 2/83, Bulletin der Schweizerischen Stiftung für Angewandte Psychologie