Unsupervised Correlation of Facial Expressions with Language

Mentor : Prof. Amitabh Mukherjee

Abhishek Kar

Introduction

Facial expressions and their varied interpretations have long intrigued a large part of the scientific community, including psychologists, neuroscientists and computer scientists. The interpretation of facial expressions and the factors that affect it have been studied extensively. Language has been found to be one of the main factors affecting the characterization of expressions, alongside culture, context and personal experience. It can be argued that language aids in understanding the emotion conveyed by a facial expression in finer detail by providing different words and phrases for emotions of different intensity. For example, an angry person may be described by an observer as "annoyed", "pissed off", "fed up", etc. In this project, I propose to build a hybrid system that automatically recognizes Facial Action Units and correlates them with human commentary on videos depicting the expression, in order to obtain a fine-grained expression classification system.

Motivation and Previous Work

Previous systems for classifying emotions have concentrated on the six basic expressions: happiness, anger, fear, surprise, disgust and sadness. There have been many approaches to categorizing facial images into different expressions. Both model-based and image-based approaches have been used [1], where the face is either modelled as a deformable model (e.g. Active Appearance Models [2] and Active Shape Models) or transformed into a feature space by a variety of filters (e.g. Gabor filters). Classifiers (e.g. LDA and SVM) are subsequently trained on these features and, given a new image, a forced-choice decision is made to recognize the expression in the image. Some methods also use video data and extract motion cues (e.g. optical flow vectors) to classify emotions. There have been some unsupervised approaches to classifying expressions [3] too, but their accuracy is still not on par with that of the supervised approaches. As mentioned above, however, all these methods classify expressions into broad categories and provide no information about intensity.

The motivation to use language as the second cue to categorize emotions comes from the extensive literature supporting language as a context for emotion classification. Studies have shown an asymmetry in expression recognition accuracy across different languages [4]. Words are known to ground category acquisition, and this may also hold for emotion categories. General language proficiency and exposure to emotion words in conversation play a key role in helping children develop an understanding of mental states, such as emotions, and allow them to attribute emotion to other people on the basis of situational cues. It has also been seen that children associate a picture depicting an angry person better with the word "angry" than with another picture of an angry person. Moreover, failure to provide options while categorizing expressions has been seen to hamper accuracy [5]. Evidence for a categorical linguistic system for expressions has also been established in a number of works [6]. All of the above makes the possibility of fusing visual categorization data with language very interesting and worth exploring.

Methodology

Development of an image-based classification system

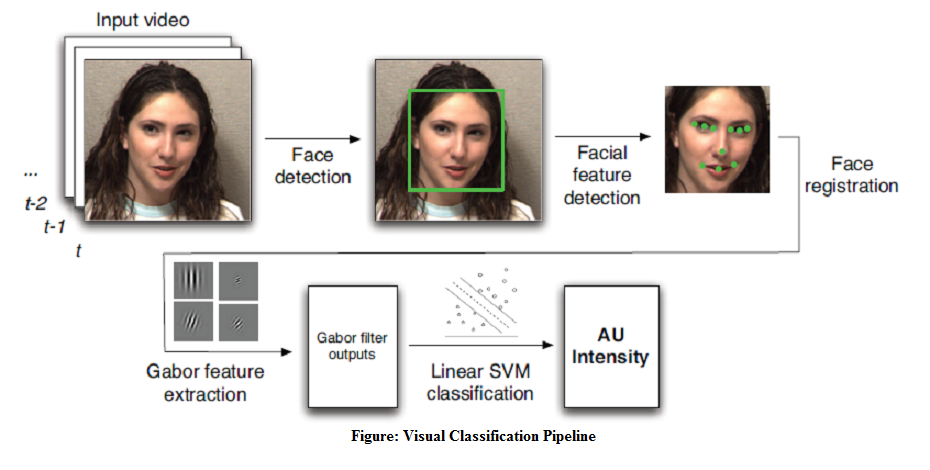

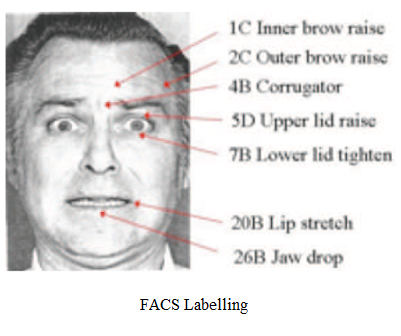

The visual classification system is inspired by the work of Littlewort et al. [7] and Bartlett et al. [8]. In this approach, a face is first detected in the given image using the Viola-Jones face detector. The face area is then cropped out, resized and converted into its Gabor magnitude representation. This is done by convolving the face image with a bank of 72 Gabor filters (8 orientations and 9 spatial frequencies). The best features for classification are then chosen by AdaBoost. Finally, 46 SVMs are trained, one for each facial action unit in the Facial Action Coding System (FACS). FACS aims at encoding facial features using a combination of elementary facial actions (Action Units), which are closely related to individual muscle actions and even to electrical signals in the brain during performance of the action. Given a new image, we can thus determine, with some measurable accuracy, which action units are present in the image.
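The Gabor magnitude step above can be illustrated with a minimal pure-Python sketch. It is not the CERT implementation: the kernel size, the half-octave wavelength spacing, the `sigma` choice and all function names (`gabor_kernel`, `gabor_bank`, `gabor_magnitude`) are assumptions made for illustration only. Only the count of 8 orientations by 9 spatial frequencies comes from the text.

```python
import cmath
import math


def gabor_kernel(size, wavelength, theta, sigma):
    """Return an odd-sized (size x size) complex Gabor kernel as nested lists."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Project the pixel onto the direction theta of the carrier wave.
            xr = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            carrier = cmath.exp(1j * 2.0 * math.pi * xr / wavelength)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


def gabor_bank(n_orientations=8, n_frequencies=9, size=9):
    """Build n_orientations * n_frequencies kernels (72 with the defaults)."""
    bank = []
    for f in range(n_frequencies):
        # Assumed spacing: wavelengths grow by half an octave per step.
        wavelength = 4.0 * (2.0 ** (f / 2.0))
        for o in range(n_orientations):
            theta = math.pi * o / n_orientations
            bank.append(gabor_kernel(size, wavelength, theta, 0.5 * wavelength))
    return bank


def gabor_magnitude(image, kernel):
    """Magnitude of the filter response at every fully overlapping position.

    The sum below is a correlation; for a real image and a Gabor kernel its
    magnitude equals that of the convolution, so no kernel flip is needed.
    """
    k = len(kernel)
    rows, cols = len(image), len(image[0])
    out = []
    for i in range(rows - k + 1):
        out_row = []
        for j in range(cols - k + 1):
            acc = 0j
            for u in range(k):
                for v in range(k):
                    acc += image[i + u][j + v] * kernel[u][v]
            out_row.append(abs(acc))
        out.append(out_row)
    return out


# Toy 16x16 "face crop" with vertical stripes, standing in for a real image.
image = [[float((x // 4) % 2) for x in range(16)] for _ in range(16)]
bank = gabor_bank()
features = [gabor_magnitude(image, kernel) for kernel in bank]
```

Each entry of `features` is one magnitude map; in the pipeline described above, the values of all 72 maps would form the feature pool from which AdaBoost selects.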

Correlating human commentary with expression videos

The next step would be to obtain human commentary on videos made from the images in the dataset. All the videos show a face going from a neutral expression to a peak expression. The subject would be shown a couple of such videos at the beginning and then asked to comment on the next one. No instruction would be given to the subject about what to say. The subject would simply be asked to describe what he or she sees in the video in a particular language. The commentary would then be transcribed into text and tokenized. Irrelevant information, common words and articles would be discarded, and I would try to find the words characterizing emotions. Based on the adjectives and phrases in the commentary, an emotion intensity rating would be assigned to the image. After gathering a sufficient number of examples, I would try to hierarchically cluster the images based on the action units present. Given a new image, the action units in the image can be computed and, based on which cluster it is closest to, a particular emotion and intensity rating can be assigned to it.
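The two halves of this step could be sketched roughly as follows. Everything here is an assumption for illustration: the stop-word list, the tiny emotion lexicon with its 1 to 3 intensity scale, the use of Hamming distance with average linkage, and the five-element binary AU vectors are hypothetical choices, not part of the proposal. Multi-word phrases such as "fed up" are ignored for brevity.

```python
import re

# Hypothetical stop words and emotion lexicon: word -> (emotion, intensity 1..3).
STOP_WORDS = {"the", "a", "an", "is", "he", "she", "looks", "very", "and", "of", "to"}
EMOTION_LEXICON = {
    "annoyed": ("anger", 1),
    "angry": ("anger", 2),
    "furious": ("anger", 3),
    "pleased": ("happiness", 1),
    "delighted": ("happiness", 3),
}


def rate_commentary(text):
    """Tokenize, drop stop words, and return the strongest (emotion, intensity)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    content = [t for t in tokens if t not in STOP_WORDS]
    hits = [EMOTION_LEXICON[t] for t in content if t in EMOTION_LEXICON]
    return max(hits, key=lambda hit: hit[1]) if hits else None


def hamming(a, b):
    """Number of action units on which two binary AU vectors disagree."""
    return sum(x != y for x, y in zip(a, b))


def average_linkage(c1, c2):
    """Mean pairwise Hamming distance between two clusters of AU vectors."""
    return sum(hamming(a, b) for a in c1 for b in c2) / (len(c1) * len(c2))


def agglomerate(vectors, n_clusters):
    """Bottom-up clustering: repeatedly merge the two closest clusters."""
    clusters = [[v] for v in vectors]
    while len(clusters) > n_clusters:
        pairs = (
            (i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        i, j = min(pairs, key=lambda p: average_linkage(clusters[p[0]], clusters[p[1]]))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


def nearest_cluster(vector, clusters):
    """Index of the cluster closest to a new AU vector."""
    return min(range(len(clusters)), key=lambda c: average_linkage([vector], clusters[c]))


# Toy AU presence vectors (1 = action unit detected) for four training images.
au_vectors = [(1, 1, 0, 0, 0), (1, 1, 1, 0, 0), (0, 0, 0, 1, 1), (0, 0, 1, 1, 1)]
clusters = agglomerate(au_vectors, 2)
rating = rate_commentary("He looks very annoyed, almost furious")
assigned = nearest_cluster((1, 1, 0, 0, 1), clusters)
```

In a fuller version, each cluster would carry the emotion and intensity ratings gathered from the commentary on its member images, and the rating of the nearest cluster would be transferred to the new image.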

Dataset

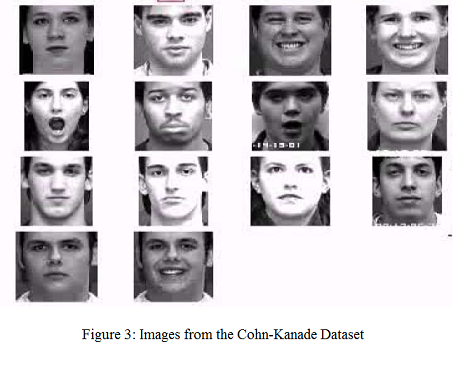

The dataset that will be used in the project is the Extended Cohn-Kanade dataset [9]. It contains 593 sequences from 123 subjects, with each sequence varying from 10 to 60 frames. Most sequences are posed, but it does contain some non-posed sequences too. Each sequence goes from a neutral expression to the peak expression. Each peak image is FACS coded and verified by two FACS coders. The dataset considers 7 emotions: Anger, Contempt, Disgust, Fear, Happy, Sadness and Surprise. An emotion label is given to 327 of the 593 sequences based on the FACS coding of the emotions and some more relaxed criteria. In addition to the above, it also contains AAM [2] tracking data for all images.

Conclusion & Side Experiments

This project is primarily aimed at developing a fine-grained expression classification system. In addition to using this system to classify images, we can also try to answer some interesting questions. For example, it will be interesting to note how an image between happy and sad is classified. A measure of accuracy for the system would be its agreement with the established codes for different emotions in the FACS. The classification would also give the intensity of each AU. This can be used for regression analysis rather than discrete clustering. We can also try to obtain commentary from bilinguals in two different languages and compare which language helps more in classifying expressions. Studies have shown that English does help in better categorization [4]. This experiment may even help us determine which language is more "expression friendly".
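The agreement measure mentioned above could be computed along these lines. This is a minimal sketch: the function name `agreement` and the toy label lists are hypothetical, and the reference labels are assumed to be the FACS-derived emotion labels shipped with the dataset.

```python
def agreement(predicted, reference):
    """Fraction of sequences whose predicted emotion matches the reference label."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        raise ValueError("at least one labelled sequence is required")
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(reference)


# Toy example: the system disagrees with the reference on one of four sequences.
predicted = ["Anger", "Happy", "Fear", "Sadness"]
reference = ["Anger", "Happy", "Surprise", "Sadness"]
score = agreement(predicted, reference)
```

The same function could be applied separately to the commentary collected in each language to compare how well each one supports classification.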

References :

[1] B. Fasel, Juergen Luettin [2003]. Automatic facial expression analysis: A Survey. Pattern Recognition.

[2] Cootes, T.F., Edwards, G.J., Taylor, C.J. [2001]. Active Appearance Models. IEEE Transactions on Pattern Analysis and Machine Intelligence.

[3] Feng Zhou, De la Torre, F., Cohn, J.F. [2010]. Unsupervised discovery of facial events. IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[4] David Matsumoto, Manish Assar [1992]. The effects of language on judgments of universal facial expressions of emotion. Journal of Nonverbal Behavior.

[5] Lisa Feldman Barrett, Kristen A. Lindquist, Maria Gendron [2007]. Language as context for the perception of emotion. Trends in Cognitive Sciences.

[6] Debi Roberson, Ljubica Damjanovic, Mariko Kikutani [2010]. Show and Tell: The Role of Language in Categorizing Facial Expression of Emotion. Emotion Review.

[7] Littlewort, G., Whitehill, J., Tingfan Wu, Fasel, I., Frank, M., Movellan, J., Bartlett, M. [2011]. The computer expression recognition toolbox (CERT). IEEE International Conference on Automatic Face & Gesture Recognition and Workshops.

[8] Marian Stewart Bartlett, Gwen Littlewort, Mark Frank, Claudia Lainscsek, Ian Fasel, Javier Movellan [2005]. Recognizing Facial Expression: Machine Learning and Application to Spontaneous Behavior. IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[9] Lucey, P., Cohn, J.F., Kanade, T., Saragih, J., Ambadar, Z., Matthews, I. [2010]. The Extended Cohn-Kanade Dataset (CK+): A complete dataset for action unit and emotion-specified expression. IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW).

[10] Paul Ekman [1992]. Facial Expression and Emotion. American Psychologist.