The Role of Language in Facial Expression of Emotion

Mentor : Prof. Amitabh Mukherjee

Abhishek Kar

Facial expressions and their varied interpretations have long intrigued a large part of the scientific community, including psychologists, neuroscientists and computer scientists. Even after 45 years of extensive research on facial expressions and emotion, following Paul Ekman's disruptive theory of universals in expression, emotion research remains a very active topic. A major challenge seems to be to reconcile the "apparently universal nature of expression production with the evident individual variation in interpretation of the same expressions".

A number of experiments support the hypothesis that six basic expressions (happiness, anger, fear, surprise, disgust and sadness) are hardwired into the brain, with evolutionary processes causing emotion categories to be associated with them. For example, experiments on blind subjects have revealed spontaneous facial expressions in happy and sad scenarios. In 1984, Levy showed that even though Tahitians lack a word for sadness in their language, they displayed sad expressions when faced with loss.

There have been numerous challenges to Ekman's theory of universals in expression. A number of cross-cultural studies have shown that people respond differently to the facial expressions of members of their own community than to those of members of another community. For example, in an experiment conducted with black and white students, both groups responded most to the facial expressions of individuals of their own race. Such experiments have been replicated for Americans and Japanese, and even in remote areas of New Guinea and Tahiti with tribes far removed from modern civilization. In spite of these differences, it should be noted that there has been no instance in which more than 70% of one cultural group labeled an expression as one emotion while a similar fraction of the other group labeled it as another. In fact, Izard showed that agreement across cultures was highest when subjects were allowed to use their own words to describe the feelings depicted in the expressions.

To reconcile cultural variations with universals, culture-specific display rules were proposed. These rules could modify core affect programs in the brain according to stylistic differences across cultures, creating "cultural dialects" in the language of expressions. A problem with this approach is that it does not account for individual differences in expression perception. Personal experiences have been shown to affect the interpretation of expressions, and temperamental differences attributed to genetic markers have a similar effect. Taken together, these observations make finding a general theory of expressions and emotions a daunting task.

In this paper, the authors propose a parallel framework for the recognition of expressions: a verbally mediated categorical system that helps in classifying expressions into broad categories, and a non-categorical perceptual system that helps in making fine-grained discriminations between expressions. The idea that verbal labels affect expression interpretation is motivated by studies showing analogous patterns in the categorical perception of color and of facial expressions, together with substantial evidence that language affects categorization in the color domain. A simple categorical system based on language would not be enough to explain how humans are able to differentiate a 'more' happy person from a 'less' happy person. This kind of fine-grained discrimination calls for a trained perceptual system.
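The division of labor between the two systems can be illustrated with a toy sketch. This is not the authors' model: the one-dimensional sad-to-happy intensity scale, the boundary value and all function names are hypothetical, chosen only to show why a category label alone cannot separate a 'more' happy face from a 'less' happy one.

```python
# Toy illustration only: a hypothetical 1-D continuum where
# 0.0 is a fully "sad" face and 1.0 is a fully "happy" face.
CATEGORY_BOUNDARY = 0.5  # assumed boundary, not a value from the paper


def verbal_label(intensity: float) -> str:
    """Categorical system: collapse a stimulus into a coarse verbal label."""
    return "happy" if intensity >= CATEGORY_BOUNDARY else "sad"


def compare(a: float, b: float) -> dict:
    """Compare two expressions using both systems side by side."""
    label_a, label_b = verbal_label(a), verbal_label(b)
    # Perceptual system: graded comparison that ignores category labels.
    if a > b:
        happier = "a"
    elif b > a:
        happier = "b"
    else:
        happier = "neither"
    return {
        "labels": (label_a, label_b),
        "same_category": label_a == label_b,
        "happier": happier,
    }


# Two faces with the same verbal label: only the graded comparison separates them.
within = compare(0.9, 0.6)
# Two faces on opposite sides of the boundary: the labels already differ.
across = compare(0.3, 0.8)
print(within)
print(across)
```

In the within-category case both faces receive the label "happy", so only the graded comparison tells them apart; in the cross-category case the labels alone already distinguish the pair.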

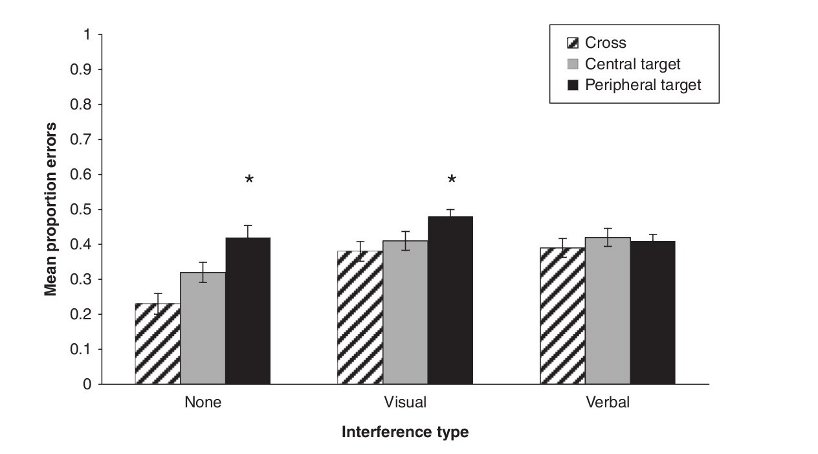

An experiment conducted by Roberson et al. seems to support the above claim. Subjects were first shown a test expression and then, after a delay of 5-10 seconds, two target expressions, and were asked to identify which of the targets matched the test expression more closely. During the interstimulus interval, they were either shown pictures of other facial expressions (visual interference) or asked to read aloud words displayed on the screen (verbal interference). Verbal interference reduced the accuracy of answers more than visual interference did. A detailed analysis of the trials in which the test and target images belonged to the same category suggested that perceptual information might indeed be used for fine differentiation of emotions.

Figure: Results of the experiment (Roberson et al. 2007)

The authors further support their argument by noting that our decisions are typically delayed when we are asked to judge expressions that belong to the same category but differ in intensity. They attribute this delay to a conflict between the linguistic and perceptual systems. Autistic children also show a deficit in classifying expressions, which the authors attribute to delayed or deviant language acquisition. They also argue that the linguistic system develops earlier than the visual system, since the latter takes time to master. By integrating linguistic and perceptual approaches to expression recognition, the authors have tried to take a step towards a general theory of facial expression production and recognition. A research problem for the future would be to find neurological evidence for the authors' argument and to further reconcile the theories of what is universal and what is different, what is innate and what is learnt.

References :

- Debi Roberson, Ljubica Damjanovic and Mariko Kikutani [2010]. Show and Tell: The Role of Language in Categorizing Facial Expression of Emotion. Journal - Emotion Review

- Paul Ekman [1992]. Facial Expression and Emotion. Journal - American Psychologist

- Debi Roberson and Jules Davidoff [2000]. The categorical perception of colors and facial expressions: The effect of verbal interference. Journal - Memory & Cognition