| METHODOLOGY

The methodology I used for real-time hand tracking is as follows.

Image sequences of the user moving his hands are captured, and the image processing method described below is applied to each of these images.

BINARIZING THE IMAGE

- The images are binarized using an appropriate threshold value. This removes a lot of background noise.
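A minimal sketch of the binarization step, assuming the frame is a grayscale image stored as a list of rows with intensities from 0 to 255. The function name `binarize` and the threshold of 128 are illustrative choices, not values taken from my implementation.

```python
def binarize(image, threshold):
    """Return a binary image: 1 where a pixel is brighter than threshold, else 0.

    `image` is a list of rows of grayscale intensities (assumed 0-255).
    """
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


# A tiny 3x4 frame: dim background noise plus a small bright region.
frame = [
    [10, 12, 200, 220],
    [8, 30, 210, 25],
    [5, 9, 14, 11],
]
binary = binarize(frame, threshold=128)
```

With a dark background, any threshold between the noise level and the brightness of the user would give the same mask.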

SMOOTHING AND OPENING

- The binarized image is smoothed using a standard Gaussian convolution matrix. Opening examines the neighbouring pixels of each pixel; it is erosion followed by dilation. Although these neighbourhood operations remove a lot of noise effectively, they are time-consuming. For many backgrounds (like the black background I am using) they are not necessary, so I leave this option to the user.
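To illustrate opening as erosion followed by dilation, here is a small sketch on a binary image with a 3x3 neighbourhood. The 3x3 window and the border handling (cells outside the image are ignored) are assumptions made for the example; Gaussian smoothing is not shown.

```python
def _window(image, r, c):
    """Yield the values in the 3x3 window around (r, c), ignoring cells outside the image."""
    rows, cols = len(image), len(image[0])
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols:
                yield image[rr][cc]


def erode(image):
    """A pixel stays 1 only if every pixel in its window is 1."""
    return [[min(_window(image, r, c)) for c in range(len(image[0]))]
            for r in range(len(image))]


def dilate(image):
    """A pixel becomes 1 if any pixel in its window is 1."""
    return [[max(_window(image, r, c)) for c in range(len(image[0]))]
            for r in range(len(image))]


def opening(image):
    """Erosion followed by dilation: removes specks smaller than the window."""
    return dilate(erode(image))


# A 3x3 blob plus one isolated noise pixel in the top-right corner.
noisy = [
    [0, 0, 0, 0, 0, 1],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
cleaned = opening(noisy)  # the blob survives, the isolated pixel is removed
```

Each pass visits every pixel and its eight neighbours, which is why these operations are costly when applied to every frame.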

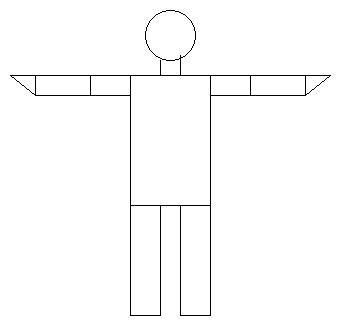

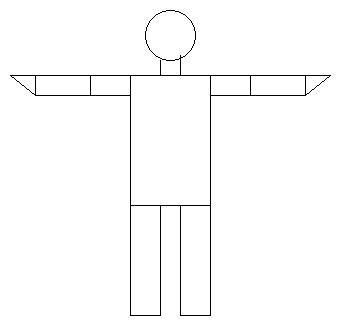

CALIBRATION:-

Initial pose of the user

In the beginning the user is made to stand with his arms wide apart. This position is used for calibration, i.e. finding the user's shoulder tip position, arm length and forearm length. I do this in the following way. Scanning inward from the two extreme ends of the image, I locate the first few pixels of high intensity. Since the background noise has been eliminated and the user is standing with his arms wide apart, these pixels correspond to the user's wrists. This gives me the x coordinates of the wrists. The x coordinates are then used to locate the y coordinates in the following way. Consider a strip of small width at each of these x coordinates. In this strip I locate the high-intensity pixels, and they give the y coordinate of the wrist. The shoulder strip is located using statistical data about human body distances.

The arm length is then found using the above data. The calibration is now complete.
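The wrist search used during calibration can be sketched as follows. This is an illustration under stated assumptions: the input is a binary image (list of rows of 0/1), the helper name `find_wrist` and its parameters `min_pixels` and `strip_width` are hypothetical, and the y coordinate is taken as the mean row of the bright pixels in the strip.

```python
def find_wrist(binary, from_left=True, min_pixels=1, strip_width=3):
    """Locate a wrist in a binary image of a user standing with arms spread.

    Columns are scanned inward from one edge; the first column holding at
    least `min_pixels` foreground pixels gives x. The y coordinate is the
    mean row of the foreground pixels in a narrow strip starting at x.
    Returns (x, y), or None if no foreground is found.
    """
    rows, cols = len(binary), len(binary[0])
    step = 1 if from_left else -1
    columns = range(cols) if from_left else range(cols - 1, -1, -1)
    for x in columns:
        if sum(binary[r][x] for r in range(rows)) >= min_pixels:
            strip = [x + step * i for i in range(strip_width)
                     if 0 <= x + step * i < cols]
            ys = [r for r in range(rows) for c in strip if binary[r][c]]
            return x, sum(ys) / len(ys)
    return None


# Toy calibration pose: a horizontal "arm span" on row 2, columns 1 to 7.
pose = [[0] * 9 for _ in range(5)]
for col in range(1, 8):
    pose[2][col] = 1

left_wrist = find_wrist(pose, from_left=True)
right_wrist = find_wrist(pose, from_left=False)
```

Raising `min_pixels` makes the scan less sensitive to any stray bright pixels that survive binarization.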

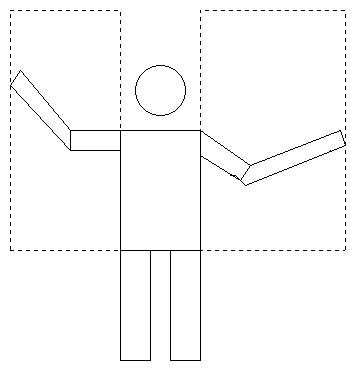

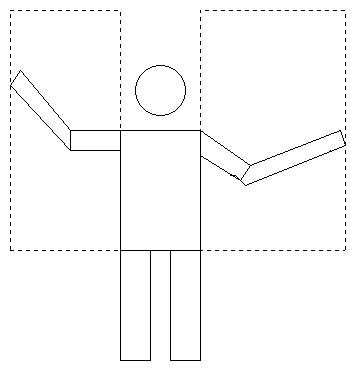

TRACKING HAND :-

Hand tracking is done in the following way. The extreme tips are located using the above procedure. A located tip may correspond either to the user's wrist or to the elbow. Since I already know the arm length and forearm length (using statistical data), I calculate the distance of the located tip from the shoulder and compare it with the upper arm length. Two cases can arise:

Case 1: The distance of the located tip is greater than the upper arm length. In this case the located tip is the wrist. I use geometry to locate the elbow joint, and I use some image processing to resolve any ambiguity that may arise.
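One way the case test and the Case 1 geometry could be sketched is shown below. It assumes the arm moves in the image plane, so the elbow lies where a circle of radius equal to the upper arm length around the shoulder meets a circle of radius equal to the forearm length around the wrist. The two intersection points are the ambiguity; here it is resolved by picking the candidate that falls on a foreground pixel, which is an assumption and not necessarily the exact check I used. All function names are hypothetical.

```python
import math


def classify_tip(shoulder, tip, upper_arm):
    """Return "wrist" if the tip is farther from the shoulder than the upper arm, else "elbow".

    A distance exactly equal to the upper arm length is treated as "elbow" (an arbitrary choice).
    """
    d = math.hypot(tip[0] - shoulder[0], tip[1] - shoulder[1])
    return "wrist" if d > upper_arm else "elbow"


def elbow_candidates(shoulder, wrist, upper_arm, forearm):
    """Intersect the two circles; return a list of 0 or 2 candidate (x, y) points."""
    sx, sy = shoulder
    dx, dy = wrist[0] - sx, wrist[1] - sy
    d = math.hypot(dx, dy)
    if d == 0 or d > upper_arm + forearm or d < abs(upper_arm - forearm):
        return []  # the circles do not meet
    a = (upper_arm ** 2 - forearm ** 2 + d ** 2) / (2 * d)  # distance along shoulder-wrist line
    h = math.sqrt(max(upper_arm ** 2 - a ** 2, 0.0))        # offset from that line
    mx, my = sx + a * dx / d, sy + a * dy / d
    return [(mx - h * dy / d, my + h * dx / d),
            (mx + h * dy / d, my - h * dx / d)]


def pick_elbow(binary, candidates):
    """Return the first candidate lying on a foreground pixel, or None."""
    for x, y in candidates:
        r, c = int(round(y)), int(round(x))
        if 0 <= r < len(binary) and 0 <= c < len(binary[0]) and binary[r][c]:
            return (x, y)
    return None


shoulder, wrist = (0, 0), (8, 0)
case = classify_tip(shoulder, wrist, upper_arm=5)
candidates = elbow_candidates(shoulder, wrist, upper_arm=5, forearm=5)

mask = [[0] * 10 for _ in range(8)]
mask[3][4] = 1  # pretend the arm is visible at x=4, y=3
elbow = pick_elbow(mask, candidates)
```

Coordinates are (x, y) with y increasing downward, matching image rows.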

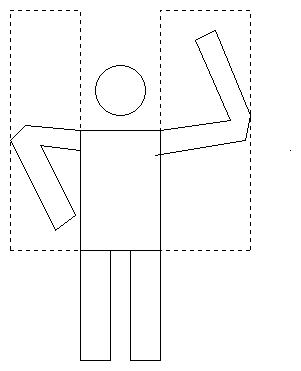

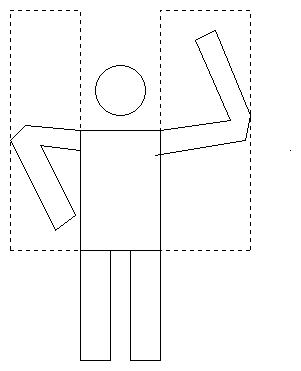

Case 2: The distance of the located tip is less than the upper arm length. In this case the located tip is the elbow joint, which also means that the arm is folded. The wrist is then found by looking both above and below the line joining the elbow and the shoulder tip. This gives me the wrist position, since I am not allowing occlusion.
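The search for the wrist of a folded arm could look roughly like the sketch below. It assumes the wrist lies at the forearm length from the elbow, and it samples points on that circle on both sides of the elbow-shoulder line until it hits a foreground pixel. The function name, the minimum angle (used to skip the upper arm itself) and the angular step are illustrative assumptions.

```python
import math


def find_folded_wrist(binary, shoulder, elbow, forearm, min_deg=20, step_deg=5):
    """Search around the elbow for the wrist of a folded arm.

    Points at distance `forearm` from the elbow are tested at increasing
    angles away from the elbow-to-shoulder direction, first on one side of
    that line and then on the other. Returns the (x, y) pixel of the first
    foreground hit, or None.
    """
    rows, cols = len(binary), len(binary[0])
    # Direction from the elbow back towards the shoulder.
    base = math.atan2(shoulder[1] - elbow[1], shoulder[0] - elbow[0])
    for deg in range(min_deg, 181, step_deg):
        for sign in (1, -1):  # one side of the line, then the other
            angle = base + sign * math.radians(deg)
            x = elbow[0] + forearm * math.cos(angle)
            y = elbow[1] + forearm * math.sin(angle)
            r, c = int(round(y)), int(round(x))
            if 0 <= r < rows and 0 <= c < cols and binary[r][c]:
                return (c, r)
    return None


# Toy frame: upper arm along row 10 (x = 2..12), forearm going up column 12.
img = [[0] * 21 for _ in range(21)]
for x in range(2, 13):
    img[10][x] = 1
for y in range(4, 11):
    img[y][12] = 1

wrist = find_folded_wrist(img, shoulder=(2, 10), elbow=(12, 10), forearm=6)
```

Because occlusion is not allowed, the forearm is always visible in the binary image, so a foreground hit on this circle can be taken as the wrist. A real frame also contains the torso, which this toy example leaves out.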

After the wrist and elbow joint have been located, the data is fed to the graphics part. The following is an example image.

GRAPHICS OUTPUT

I had initially planned to give a much better graphics output than the present one, but due to some implementation problems (shortage of memory on the host system) I had to discard the idea. My initial plan was to do animation using dancer images (or drawings) with arms in various positions. This had a problem: since the number of such images is limited, the continuous data output from the image processing part had to be discretised. The present approach does not have this problem, since the images are constructed according to the output of the image processing part.

|