Satyendra Kumar Gupta (satyend@iitk.ac.in)

S.Prashanth Aditya (aditya@iitk.ac.in)

IIT Kanpur: April 2000

There is something we would like to point out at this stage. So far, we had been working with a segmentation algorithm that works in basically the same way as the Local Histogramming variant of HBP (histogram back-projection), although we did not realise this until very recently. The difference is that, instead of comparing each pixel in the target region (via its neighbourhood) with the group of pixels that make up the model image, we compared each individual pixel with a threshold value that we had arrived at through experimentation. As a result, our algorithm was not very robust and, importantly, we could not do much about the noise introduced by scanning.

HBP determines the most likely spatial position of a given histogram in an image. According to John Smith, it is defined as "a class of algorithms designed to detect within images the feature histograms that are similar to a given model histogram".

Combined with what is known as the Visual Feature Library (VFL), HBP constitutes a powerful solution for extracting regions from a large collection of unconstrained images.

HBP consists of four steps: back-projection, smoothing, thresholding and detection. The Local Histogramming method combines the smoothing and back-projection steps. It works as follows: for each point in the target image, a local neighbourhood histogram is computed, and its distance to the model histogram determines whether that image point belongs to the model. (The model image provides the model histogram; the model images constitute the VFL.)
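The Local Histogramming step can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not our actual program: it works on a grayscale image, uses 8 bins of 32 levels normalised with r = 1 and the r = 1 Minkowski-form distance, and the names `histogram` and `local_histogram_backprojection`, the window half-size and the distance threshold are all hypothetical choices.

```python
import numpy as np


def histogram(values, bins=8, levels=256):
    """8-bin intensity histogram (32 levels per bin), normalised with r = 1."""
    width = levels // bins
    counts = np.bincount(np.asarray(values, dtype=np.int64).ravel() // width,
                         minlength=bins).astype(float)
    return counts / counts.sum()


def local_histogram_backprojection(image, model_hist, half_window=2, threshold=0.5):
    """Mark pixels whose local neighbourhood histogram is close to the model.

    Hypothetical parameters: half_window gives a (2*half_window + 1)^2
    neighbourhood; threshold is the largest r = 1 distance accepted as a match.
    """
    image = np.asarray(image)
    rows, cols = image.shape
    mask = np.zeros((rows, cols), dtype=bool)
    for y in range(rows):
        for x in range(cols):
            # Neighbourhood around (y, x), clipped at the image borders.
            y0, y1 = max(0, y - half_window), min(rows, y + half_window + 1)
            x0, x1 = max(0, x - half_window), min(cols, x + half_window + 1)
            local = histogram(image[y0:y1, x0:x1])
            # r = 1 Minkowski-form distance between local and model histograms.
            distance = np.abs(local - model_hist).sum()
            mask[y, x] = distance <= threshold
    return mask


# Toy example: bright region on the left, dark background on the right.
img = np.full((10, 10), 20, dtype=np.uint8)
img[:, :5] = 200
model = histogram(np.full((4, 4), 200))  # model image: a bright patch
print(local_histogram_backprojection(img, model).astype(int))
```

Because each decision is based on a whole neighbourhood rather than a single pixel, an isolated noisy pixel shifts the local histogram only slightly; the price is that region boundaries become less sharp.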

The concept of "distance" between histograms is simple to understand. If two histograms are regarded as points in a histogram space, the distance between them is a number that is zero if they are the same and positive if they are different. The distance is also symmetric (D(1,2) = D(2,1)) and satisfies the triangle inequality (given histograms 1, 2 and 3, D(1,2) + D(2,3) >= D(1,3)).

There are many ways to calculate the distance; any function that obeys the above laws is valid. We use the Minkowski-form distance, defined as follows. Let $h_q$ and $h_t$ be the query and target histograms, respectively, each with $M$ bins; then

$$d_r(h_q, h_t) = \left( \sum_{m=0}^{M-1} \left| h_q[m] - h_t[m] \right|^r \right)^{1/r},$$

where r could be 1 or 2. We choose r = 1; essentially, we get the same results with either value.
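For r = 1 the formula reduces to the sum of absolute bin-by-bin differences. A minimal Python sketch of the formula (the function name `minkowski_distance` and the toy histograms are our own, hypothetical choices):

```python
def minkowski_distance(h_q, h_t, r=1):
    """Minkowski-form distance: (sum over bins of |h_q[m] - h_t[m]|^r)^(1/r)."""
    if len(h_q) != len(h_t):
        raise ValueError("histograms must have the same number of bins")
    return sum(abs(a - b) ** r for a, b in zip(h_q, h_t)) ** (1.0 / r)


h_q = [0.50, 0.50, 0.00]
h_t = [0.25, 0.75, 0.00]
print(minkowski_distance(h_q, h_t, r=1))  # 0.5
print(minkowski_distance(h_q, h_t, r=2))  # about 0.354
```

Taking the 1/r-th root keeps the r = 2 case a true metric (Euclidean distance), so the triangle inequality holds for both choices of r.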

John Smith also presented two efficient strategies for computing histogram queries. The first, query-optimized distance (QOD) computation, prioritizes the elements of the computation in order of their significance to the query, which makes the query computation more efficient. Furthermore, by stopping the computation early, so that the actual histogram distances are only approximated, the query response time is reduced dramatically without significantly decreasing retrieval effectiveness.
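The following is a minimal sketch of one reading of the QOD idea for r = 1, not Smith's exact procedure: bins are visited in decreasing order of the query value h_q[m], and the optional parameter `num_bins_to_use` (a hypothetical name) stops the sum early. The resulting partial distance can never exceed the full one, and it is often enough to rank candidate targets.

```python
def qod_distance(h_q, h_t, num_bins_to_use=None):
    """Query-optimised r = 1 distance (a sketch, not Smith's exact method).

    Bins are summed in decreasing order of the query value h_q[m]. If
    num_bins_to_use is given, only that many bins are summed, giving an
    approximate (partial) distance that never exceeds the full distance.
    """
    order = sorted(range(len(h_q)), key=lambda m: h_q[m], reverse=True)
    if num_bins_to_use is not None:
        order = order[:num_bins_to_use]
    return sum(abs(h_q[m] - h_t[m]) for m in order)


query = [0.6, 0.3, 0.1, 0.0]
targets = {"a": [0.5, 0.2, 0.1, 0.2], "b": [0.0, 0.1, 0.3, 0.6]}
# Rank the targets using only the two most significant query bins.
ranking = sorted(targets, key=lambda name: qod_distance(query, targets[name], 2))
print(ranking)  # ['a', 'b']
```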

As far as the histograms are concerned, the range of 256 intensities is divided into 8 bins of 32 intensity levels each. The bins are then normalised according to the following function:

$$\hat{h}[m] = \frac{h[m]}{\left( \sum_{k=0}^{M-1} \left| h[k] \right|^r \right)^{1/r}}, \qquad M = 8,$$

so that $\sum_{m=0}^{M-1} |\hat{h}[m]|^r = 1$, where again r = 1 or 2 and we choose r = 1.

This is a feature vector representation of discrete-space, two-bin normalised histograms (with r = 1).
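A minimal Python sketch of the binning and normalisation described above, assuming 8-bit grayscale intensities (0 to 255); the function name `normalised_histogram` is hypothetical.

```python
def normalised_histogram(intensities, num_bins=8, levels=256, r=1):
    """Bin 0..255 intensities into num_bins equal bins, then L_r-normalise."""
    width = levels // num_bins  # 256 // 8 = 32 intensity levels per bin
    counts = [0] * num_bins
    for value in intensities:
        counts[value // width] += 1
    norm = sum(c ** r for c in counts) ** (1.0 / r)
    if norm == 0:
        return [0.0] * num_bins  # empty input: nothing to normalise
    return [c / norm for c in counts]


h = normalised_histogram([0, 31, 32, 255])
print(h)  # [0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25]
```

With r = 1 the bin values sum to 1, so each bin is simply the fraction of pixels falling into it.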

Minkowski-form distances compare only corresponding bins; they take no account of similarity between different bins, not even between bins of a similar kind. As John Smith puts it, in Minkowski-form distance computation, deep red is as different from red as it is from blue.
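A small Python illustration of this point, using hypothetical 3-bin colour histograms (the bin labels are our own assumption): with the r = 1 distance, a single-colour histogram is equally far from every other single-colour histogram, however perceptually close the colours are.

```python
def l1_distance(h_q, h_t):
    # Minkowski-form distance with r = 1: bin m is compared only with bin m.
    return sum(abs(a - b) for a, b in zip(h_q, h_t))


# Hypothetical 3-bin colour histograms: bins = [deep red, red, blue]
deep_red = [1.0, 0.0, 0.0]
red = [0.0, 1.0, 0.0]
blue = [0.0, 0.0, 1.0]

print(l1_distance(deep_red, red))   # 2.0
print(l1_distance(deep_red, blue))  # 2.0
```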

NOTE: All these images were taken from John Smith's thesis page.

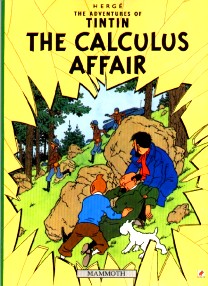

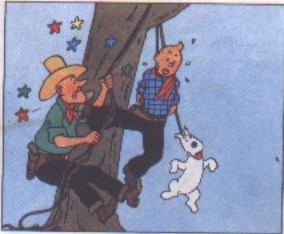

Here, we would like to present a comparison of the algorithm we used earlier and this one. The first image is a scanned image of Tintin, an example of the input our program accepts (in PPM format, of course). The second image is the first one segmented with our earlier method. The third is the result of the new algorithm.

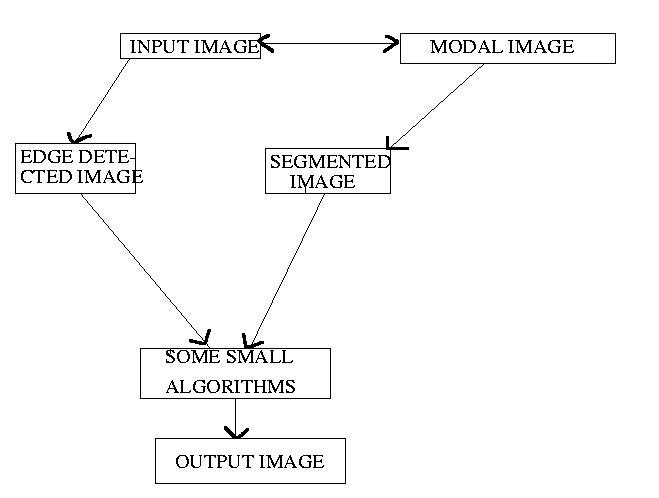

The results page shows better examples of segmented images. These were obtained by using some other simple algorithms in conjunction with the HBP algorithm. This is represented schematically in the image shown below these three.

The salient features of our previous algorithm:

The salient features of the new algorithm we have used:

The first difference that strikes one between the two images is that the latter gives much less edge detail than the former. What we essentially get is a rough idea of where in the image the colours we are looking for are located. Another thing to notice, however, is that the noise is completely eliminated in the latter image.

@Article{Syeda:1999,

author = { T. F. Syeda-Mahmood},

year = { 1999},

keywords = { IMAGE TEXTURE SEGMENTATION},

institution = { IBM Almaden Research Centre, CA},

journal = { Computer Vision and Image Understanding},

title = { Detecting Perceptually Salient Texture Regions in Images},

month = { October},

volume = { 76},

pages = { 93-108 },

e_mail = { stf@almaden.ibm.com},

annote = {

This paper reports on the techniques and heuristics used to group salient texture regions in
natural scenes. It works out the possible presence of distinctive patterns localised at some
arbitrary position in a given image. The saliency of a texture region can be inferred by analyzing
the interplay between bright and dark regions against a gray background; this is done by
convolution with an operator such as the LoG (Laplacian of Gaussian). One of the problems faced by
the author is the selection of the relevant area to which the analysis is to be applied. The
heuristic proposed is to assign weight functions to the different visible patterns and obtain the
relevant portion from the MST (minimum spanning tree) of the region.

An interesting part of the paper is the texture segmentation method based on moving-window
analysis and an LP-spectrum difference measure. It first divides the image into overlapping
windows and, through a series of iterations, reconstructs the missing portions of the boundary
fragments, thus in a way parsing the whole image.

One important aspect of the paper that remained unclear is the choice of weight function based on
"psychophysical experimental data". What I propose is to use fuzzy logic: each pattern type can be
mapped to some prototype, and appropriate parameters can be obtained using fuzzy laws and
principles. Overall, the paper is well presented and the end results are well exemplified.

}

}

@InProceedings{Yamada/Watanabe:1999,

author = { Yamada, T. and Watanabe, T.},

year = { 1999},

keywords = { CARTOONS-RECOGNITION EXTRACTION PERSON-SLOPE VECTORIZATION IDENTIFICATION },

institution = { Graduate School of Engineering, Nagoya University},

title = { Identification of Person Objects in Four-Scenes Comics of Japanese Newspapers},

booktitle = { Intl. Workshop on Graphics Recognition, GREC'99},

pages = { 152-159},

email = { {yamada,watanabe}@watanabe.nuie.nagoya-u.ac.jp},

annote = {

This paper describes a method for identifying comic characters. Extracting meaningful data from
paper-based documents, so that the extracted data can be manipulated as operative objects in
electronic documents, is regarded as an interesting and worthwhile problem, and such methods have
been applied to various kinds of documents to date. In this type of comic, the same objects are
illustrated in different scenes, but their shapes, sizes, locations, directions, whole-part
relationships and so on are tremendously deformed and changed. The analysis method is based on the
distance distribution among composite elements.

For the extraction and identification of person objects, the basic recognition strategy focuses on
the hair areas. Here a hair area is defined as an area of contiguous black-painted pixels whose
number exceeds an experimentally determined threshold. The next processing stage extracts the areas
related to person objects with respect to the hair areas found in the previous stage; here it is
effective to determine the search directions first. Characteristic pixels, which capture notable
features of the drawn lines such as direction and structure, are then located in the handwritten
line segments.

Using the characteristic pixels, the handwritten line drawings are vectorized: basically, two
neighbouring characteristic pixels in one handwritten line segment are transformed into one straight
line segment. The final processing step in the extraction phase is to select the vectorized line
segments in the estimated person region and keep only reliable line segments as composite elements
of person objects. In the identification phase, the objective is to find the same person object in
the four different scenes.

}

}

@Article{Lai/Chin:1995,

author = { Lai, Kok F. and Chin, Roland T.},

year = { 1995},

keywords = { DEFORMABLE-MODEL FEATURE-EXTRACTION},

institution = { },

title = { Deformable contours: modeling and extraction},

journal = { IEEE transactions on Pattern Analysis and Machine Intelligence},

month = {},

volume = { 17},

number = { 11},

pages = { 1084-1090},

e_mail = {},

annote = {

This paper considers the problem of modeling and extracting arbitrary deformable contours from noisy
images. The authors propose a global contour model based on a stable and regenerative shape matrix,
which is invariant and unique under rigid motions. Combined with a Markov random field to model local
deformations, this yields a prior distribution that exerts influence over a global model while
allowing for deformations. The authors then cast the extraction problem as posterior estimation and
show its equivalence to energy minimization of a generalized active contour model. The paper
discusses pertinent issues in shape training, energy minimization, line search strategies, minimax
regularization and initialization by the generalized Hough transform. Finally, experimental results
are presented and a performance comparison vis-a-vis rigid template matching is conducted.

The major problem with this paper is that it uses a few structures and terms without making it
clear to the reader how they are actually calculated. For instance, the concept of variance at a
point (with regard to deformations of the contour) is used throughout the paper. Although this can be
understood intuitively, there are no details, such as which points in the image were considered when
calculating it. This poses a very big problem if we have to implement the algorithms discussed in the
paper, which, I should say, are really good.

- S.P.Aditya, 09/04/2000

}

}

NOTE: The CVPR, PAMI and thesis versions of [Lai/Chin:1995] can be downloaded, in zipped or normal form, from Cora's pages.
